摘 要

本课题的主要目的是设计面向定向网站的网络爬虫程序,同时需要满足不同的性能要求,详细涉及到定向网络爬虫的各个细节与应用环节。

搜索引擎作为一个辅助人们检索信息的工具。但是,这些通用性搜索引擎也存在着一定的局限性。不同领域、不同背景的用户往往具有不同的检索目的和需求,通用搜索引擎所返回的结果包含大量用户不关心的网页。为了解决这个问题,一个灵活的爬虫有着无可替代的重要意义。

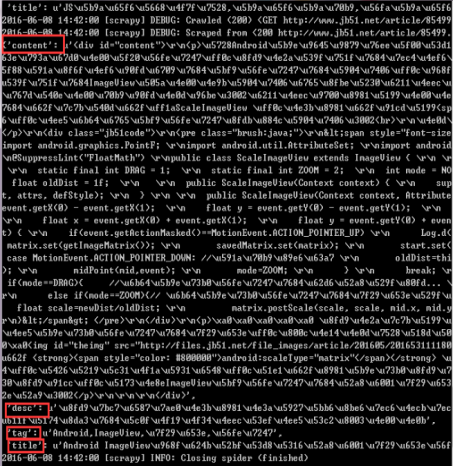

网络爬虫应用智能自构造技术,随着不同主题的网站,可以自动分析构造URL,去重。网络爬虫使用多线程技术,让爬虫具备更强大的抓取能力。对网络爬虫的连接网络设置连接及读取时间,避免无限制的等待。为了适应不同需求,使网络爬虫可以根据预先设定的主题实现对特定主题的爬取。研究网络爬虫的原理并实现爬虫的相关功能,并将爬去的数据清洗之后存入数据库,后期可视化显示。

关键词:网络爬虫,定向爬取,多线程,Mongodb

ABSTRACT

The main purpose of this project is to design a web crawler targeted at specific websites that also meets a range of performance requirements. The work addresses the design details of the targeted crawler and each stage of its application.

Search engines are tools that help people retrieve information. General-purpose search engines, however, have limitations: users in different fields and with different backgrounds tend to have different purposes and needs, yet the results returned contain a large number of web pages that the user does not care about. A flexible crawler is therefore of great significance in solving this problem.

The crawler applies an intelligent self-construction technique: depending on the topic of the site, it automatically analyzes and constructs URLs and removes duplicates. It uses multithreading to increase its crawling capacity. Connection and read timeouts are set on the crawler's network connections to avoid waiting indefinitely. To adapt to different needs, the crawler can crawl specific topics according to preset themes. In addition, this work studies the principles of web crawlers, implements the relevant crawler functions, saves the crawled data to a database after cleaning, and later presents it visually.

Keywords: web crawler, targeted crawling, multithreading, MongoDB
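The techniques named in the abstract (URL construction, de-duplication, multithreaded fetching, and bounded waiting) can be illustrated with a minimal sketch. It is an assumption-laden illustration, not the implementation described in this thesis: it uses only the Python standard library, the function names (`build_urls`, `dedupe`, `fetch`, `crawl`) and the URL template format are hypothetical, and cleaning and MongoDB storage are left out.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urldefrag, urlsplit, urlunsplit
from urllib.request import urlopen


def normalize_url(url):
    """Lower-case the scheme and host and drop the fragment so equivalent URLs compare equal."""
    url, _fragment = urldefrag(url.strip())
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                       parts.path or "/", parts.query, ""))


def build_urls(template, pages):
    """Construct candidate URLs from a site-specific template (hypothetical format)."""
    return [template.format(page=p) for p in pages]


def dedupe(urls):
    """Keep the first occurrence of each normalized URL, preserving order."""
    seen = set()
    unique = []
    for url in urls:
        key = normalize_url(url)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


def fetch(url, timeout=10.0):
    """Download one page; the timeout bounds the connection attempt and blocking reads."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def crawl(urls, fetcher=fetch, max_workers=8):
    """Fetch de-duplicated URLs on a thread pool; failures are recorded, not raised."""
    targets = dedupe(urls)
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {url: pool.submit(fetcher, url) for url in targets}
        for url, future in futures.items():
            try:
                results[url] = future.result()
            except Exception as exc:  # e.g. network errors or timeouts
                results[url] = exc
    return results
```

Passing the fetch function as a parameter keeps the network call replaceable, so the de-duplication and threading logic can be exercised without touching a real site. A storage step would consume the returned dictionary after cleaning.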

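Contents

Chapter 1 Overview

1.1 Project Background

1.2 History and Classification of Web Crawlers

Chapter 2 Literature Review

2.1 Overview of Web Crawler Theory

2.2 Introduction to Web Crawler Frameworks

Chapter 3 Research Plan

3.1 Model Analysis of the Web Crawler

3.2 URL Construction Strategy

3.3 Analysis of Data Extraction and Storage

Chapter 4 Design and Implementation of the Web Crawler Model

4.1 Overall Design of the Web Crawler

4.2 Detailed Design of the Web Crawler

Chapter 5 Experiments and Analysis of Results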

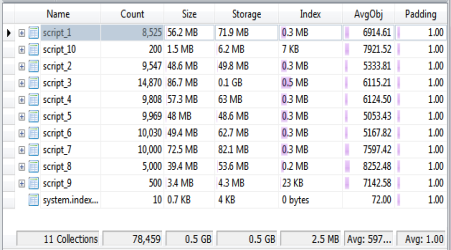

5.2 Analysis of Results

References

Acknowledgements

Appendix 1

Appendix 2

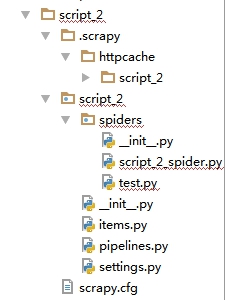

1) The crawler's code files are organized as shown in the figure: