摘 要

大规模图数据处理已经成为大数据时代的一个重要组成部分,无论是在社交网络,还是在Web应用、生物信息网络等场景中都有所涉及。图计算系统的研究,也因此成为了高性能计算领域的热点。随着通用GPU技术的发展,GPU被用来加速图数据的处理成为了一种趋势。然而通过调研发现,当前的GPU图计算系统以同步处理模型为基础,这种处理模型需要在每次迭代执行之后,同步所有顶点的更新数据,然而由于数据划分不均衡,导致同步过程开销巨大,也减缓了图算法的收敛速度,同时不能高效利用GPU的并行计算能力。

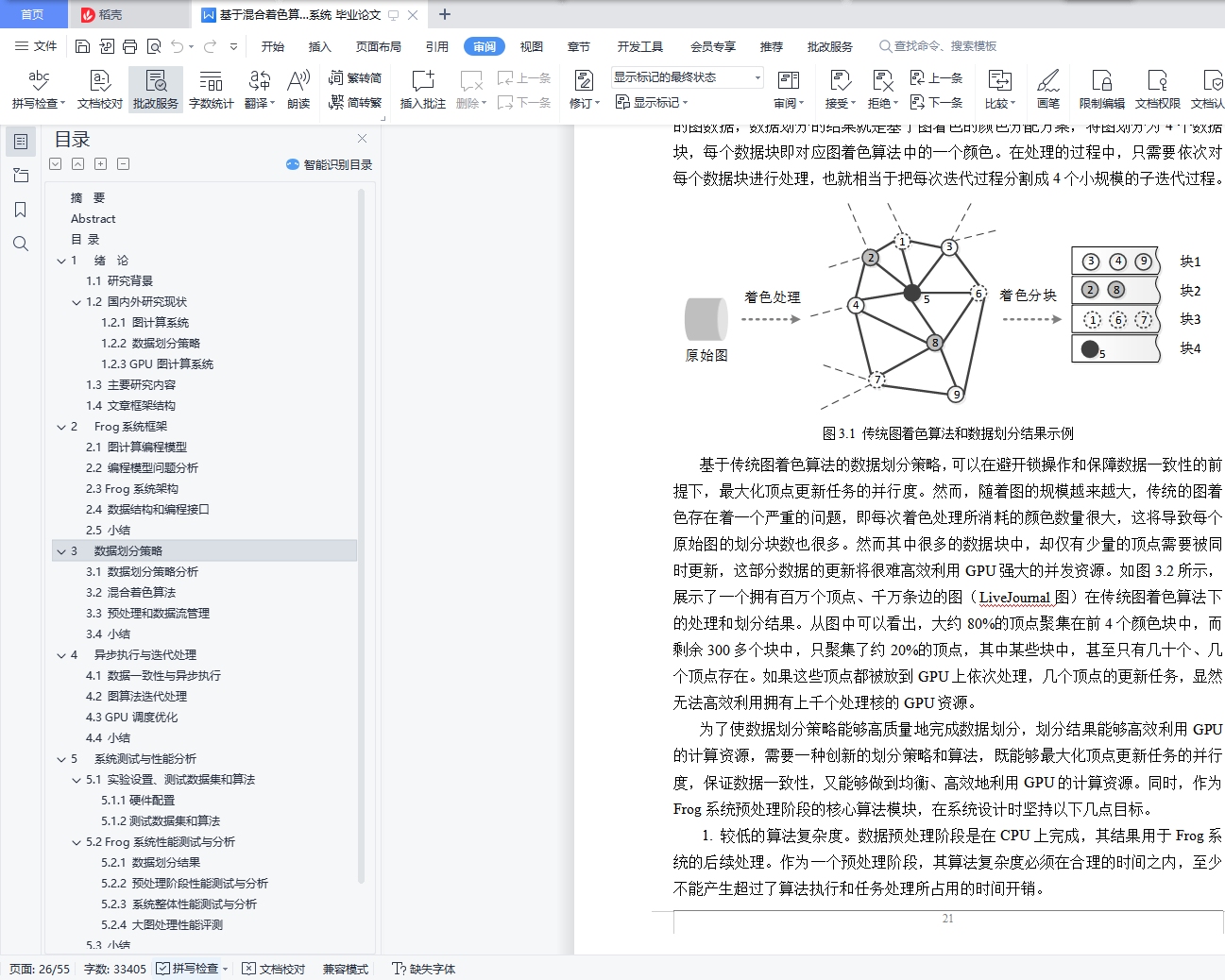

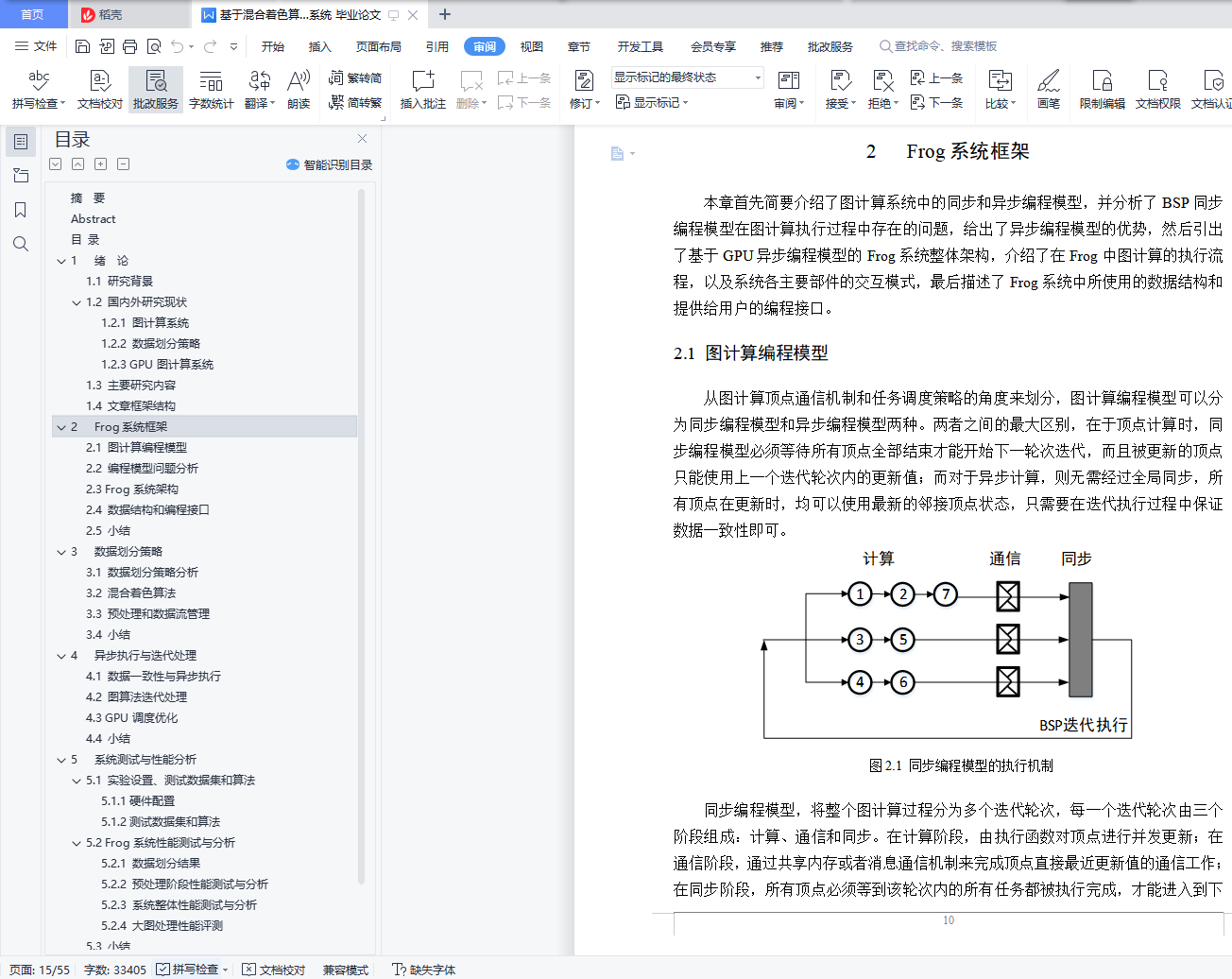

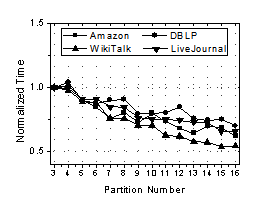

采用异步处理模型的GPU图计算系统Frog,解决了迭代过程同步开销大、GPU并行计算能力受限、算法收敛速度慢的问题。首先,Frog采用异步处理模型,在保证异步处理过程中数据一致性的同时,避免了当前系统中存在的同步通信开销;其次,Frog采用基于混合着色算法的数据划分策略,通过对所有顶点进行混合着色处理,整合计算任务少的顶点,保证在GPU的每个更新窗口中都有足够的计算任务在执行,从而提高GPU的并发资源利用率;最后,Frog通过加快迭代计算过程中消息的传递速度,从而使所有顶点都能够访问到最新的更新值,进而加快图算法的收敛速度。此外,Frog通过对超出GPU内存的图进行分块处理,保证每个数据块都能够适应GPU内存大小,从而有效地处理超大规模的图数据。

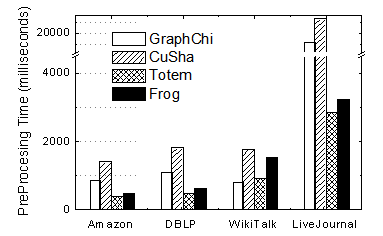

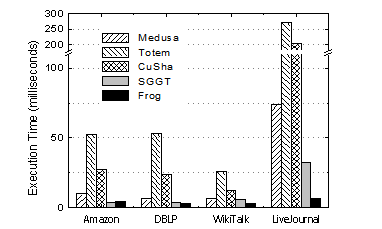

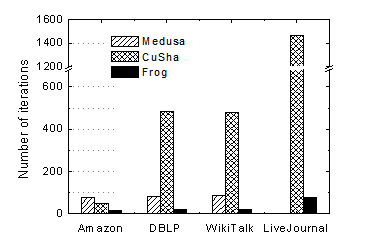

Frog以CUDA语言为基础进行编程设计,并提供了开放的API接口,使得用户可以方便地进行GPU编程。实验表明,Frog比现有的GPU图计算系统能够获得3.2倍以上的性能提升。此外,Frog系统还能够处理现有的GPU图计算系统无法处理的、超出GPU设备内存的大规模图数据,比现有的CPU图计算系统能够获得5.3倍以上的性能提升。

关键词:图计算,GPU,数据划分,异步处理模型

Abstract

Large-scale graph computing has become an important part of big data processing. It can be found whether in the social networks, Web applications, or biological information networks. And research on graph processing systems becomes one of the hot fields of High Performance Computing (HPC). With the development of GPGPU technology, it becomes a trend that GPU is used to accelerate the graph processing. However, current GPU based graph computing systems are developed based on the synchronous processing model, which needs a barrier to complete the data synchronization after each iteration of the execution. These systems cannot make full use of GPU computing resources because of the unbalanced data partitioning. And the overhead of data synchronization is huge, which also slows the convergence speed of graph algorithms.

The graph computing system Frog is based on the asynchronous processing model. Frog does not need the data synchronization barrier. And Frog solves the problem that synchronous systems cannot make full use of GPU computing resources and slow the convergence speed of graph algorithms. Firstly, Frog uses asynchronous model to avoid the overhead of data synchronization barrier and ensures the graph processing consistency at the same time. Secondly, Frog adopts a novel data partitioning strategy, which colors all vertices of the input graph and assigns some vertices into the hybrid chunk to ensure enough computing tasks for each GPU kernel. So Frog can improve the utilization of GPU parallel computing resources. Finally, Frog accelerates the transfer speed of messages of updated vertices. As all vertices are able to access to the latest updates inside the current iteration, Frog accelerates the convergence speed of graph algorithms. In addition, by dividing graphs into a number of sub graphs adapted to the memory size, Frog can process graphs with the scale out of the GPU device memory.

We design the system Frog based on the CUDA programming framework and provide some open APIs so that users can conveniently complete the graph processing on Frog. Experiments based on real-world graphs show that Frog outperforms other state-of-the-art approaches by over 3.2X. And for out-of-GPU-memory graphs that cannot be processed by other systems, Frog achieves a speedup of 5.3X than other CPU-based graph computing systems.

Key words: graph computing, GPU, data partitioning, asynchronous processing model

目 录

摘 要 I

Abstract II

1 绪 论

1.1 研究背景 (1)

1.2 国内外研究现状 (3)

1.3 主要研究内容 (7)

1.4 文章框架结构 (8)

2 Frog系统框架

2.1 图计算编程模型 (10)

2.2 编程模型问题分析 (12)

2.3 Frog系统架构 (14)

2.4 数据结构和编程接口 (17)

2.5 小结 (19)

3 数据划分策略

3.1 数据划分策略分析 (20)

3.2 混合着色算法 (22)

3.3 预处理和数据流管理 (26)

3.4 小结 (27)

4 异步执行与迭代处理

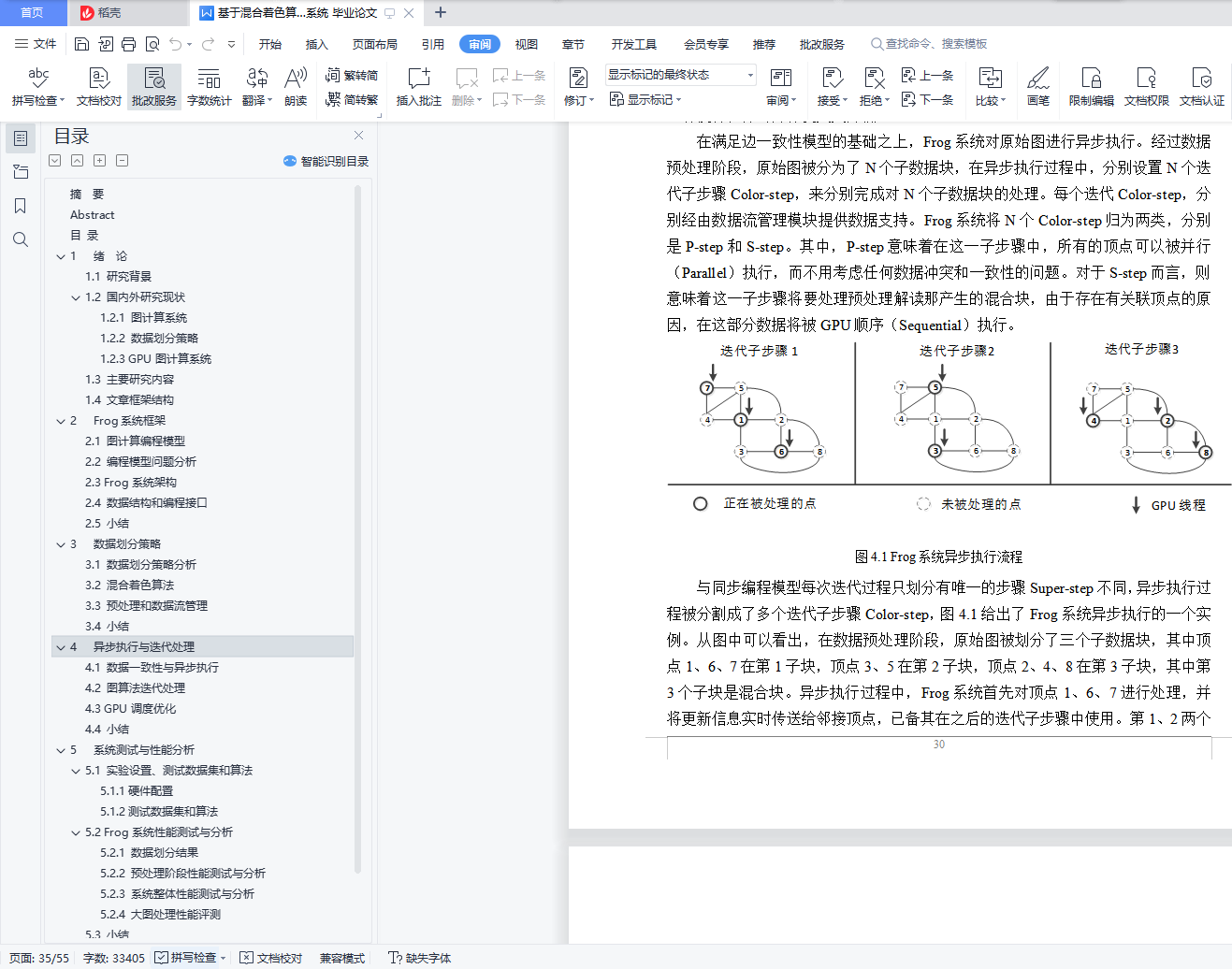

4.1 数据一致性与异步执行 (29)

4.2 图算法迭代处理 (31)

4.3 GPU调度优化 (33)

4.4 小结 (34)

5 系统测试与性能分析

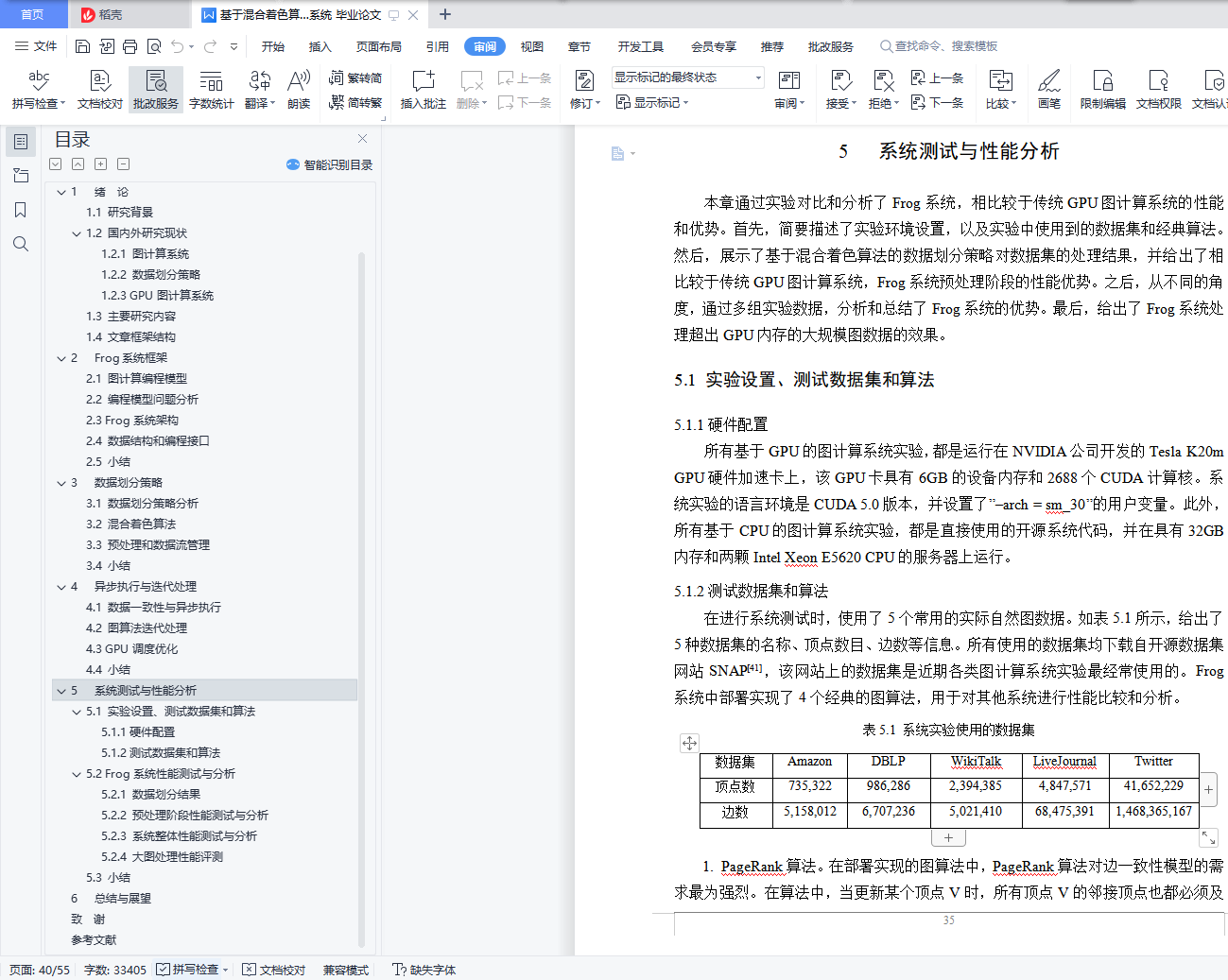

5.1 实验设置、测试数据集和算法 (35)

5.2 Frog系统性能测试与分析 (36)

5.3 小结 (42)

6 总结与展望 (43)

致 谢 (45)

参考文献 (47)